Autoscaling in a Cloud – Best Practices

In this article, we will look at autoscaling in the cloud and the best practices for using it.

Autoscaling in the cloud refers to the ability to automatically adjust the number of compute resources, such as virtual machines or containers, based on the current demand or workload.

It is a feature provided by cloud service providers that allows you to scale your infrastructure resources up or down dynamically, without the need for manual intervention.

Autoscaling helps cloud-based systems maintain optimal performance, increases cost-effectiveness, and raises overall reliability.

To make the most of this functionality and guarantee that your applications can properly handle changing workloads, it’s crucial to use autoscaling best practices.

This article walks through cloud autoscaling best practices, covering implementation, monitoring, optimization, and common difficulties.

With autoscaling, you can define scaling policies or rules that determine when and how to scale your resources. These policies are typically based on metrics such as:

- CPU utilization

- Network traffic

- Custom application-specific metrics

- Memory utilization

- Latency

We will cover the following best practices for autoscaling:

- Monitor and Set Metrics

- Right-Sizing Instances

- Use Scaling Triggers

- Implement Scaling Policies – Horizontal Vs Vertical

- Implement Health Checks

- Test and Monitor Scaling

- Consider Cost Optimization

- Evaluate Load Balancing

- Define Scaling Triggers

- Capacity Planning

- Combining Autoscaling with Autohealing

- Scaling Across Multiple Regions

- Autoscaling for Serverless Architectures

Monitor and Set Metrics

Define meaningful metrics to monitor your application’s performance and workload.

These metrics can include CPU utilization, memory usage, network traffic, or custom application-specific metrics.

Set appropriate thresholds for scaling actions based on these metrics.
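As a rough illustration of a threshold on a single metric, here is a minimal sketch in Python. The `Thresholds` class, the `scaling_action` function, and the 70% / 30% CPU values are assumptions made for this example, not values from any cloud provider.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Thresholds:
    """Hypothetical thresholds, expressed as CPU utilization percentages."""
    scale_out_above: float = 70.0
    scale_in_below: float = 30.0


def scaling_action(cpu_percent: float, t: Thresholds = Thresholds()) -> str:
    """Return 'scale_out', 'scale_in', or 'hold' for one metric reading."""
    if cpu_percent > t.scale_out_above:
        return "scale_out"
    if cpu_percent < t.scale_in_below:
        return "scale_in"
    return "hold"


print(scaling_action(85.0))  # scale_out
print(scaling_action(50.0))  # hold
```

Keeping a gap between the two thresholds helps avoid scaling out and back in on small fluctuations.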

Right-Sizing Instances

Optimize the size of your instances to match the workload requirements.

Undersized instances may result in performance issues, while oversized instances can lead to unnecessary costs.

Regularly review and adjust the instance types and sizes based on workload patterns and performance metrics.
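To make right-sizing concrete, the sketch below picks the smallest size that covers observed peak usage plus some headroom. The `SIZES` catalogue, the size names, and the 20% headroom are all hypothetical; real instance types and prices come from your provider.

```python
from typing import Optional

# Hypothetical size catalogue: (name, vCPUs, memory in GiB), smallest first.
SIZES = [("small", 2, 4), ("medium", 4, 8), ("large", 8, 16)]


def right_size(peak_vcpus: float, peak_mem_gib: float,
               headroom: float = 0.2) -> Optional[str]:
    """Return the smallest size that fits peak usage plus headroom, or None."""
    need_cpu = peak_vcpus * (1 + headroom)
    need_mem = peak_mem_gib * (1 + headroom)
    for name, vcpus, mem_gib in SIZES:
        if vcpus >= need_cpu and mem_gib >= need_mem:
            return name
    return None  # nothing in the catalogue is large enough


print(right_size(1.5, 3.0))  # small
print(right_size(3.0, 5.0))  # medium
```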

Use Scaling Triggers

Identify the appropriate scaling triggers for your application.

These triggers can include a predefined schedule, specific metrics reaching threshold values, or custom events.

For example, you might scale up during peak hours or when CPU utilization exceeds a certain threshold.
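The peak-hours and CPU example can be sketched as a single check. The peak window (`PEAK_START`, `PEAK_END`) and `CPU_THRESHOLD` are assumed values chosen only for illustration.

```python
from datetime import time

# Assumed values for illustration only.
PEAK_START = time(9, 0)
PEAK_END = time(18, 0)
CPU_THRESHOLD = 75.0  # percent


def should_scale_up(now: time, cpu_percent: float) -> bool:
    """Trigger on a schedule (peak hours) or on a metric threshold."""
    in_peak_hours = PEAK_START <= now < PEAK_END
    return in_peak_hours or cpu_percent > CPU_THRESHOLD


print(should_scale_up(time(10, 0), 20.0))  # True (schedule trigger)
print(should_scale_up(time(22, 0), 90.0))  # True (metric trigger)
print(should_scale_up(time(22, 0), 20.0))  # False
```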

Implement Scaling Policies – Horizontal Vs Vertical

Determine scaling policies based on your application’s needs.

These policies can include adding or removing instances based on the scaling triggers.

Consider both horizontal scaling (adding or removing instances) and vertical scaling (increasing or decreasing the resources of existing instances) based on the specific requirements of your application.

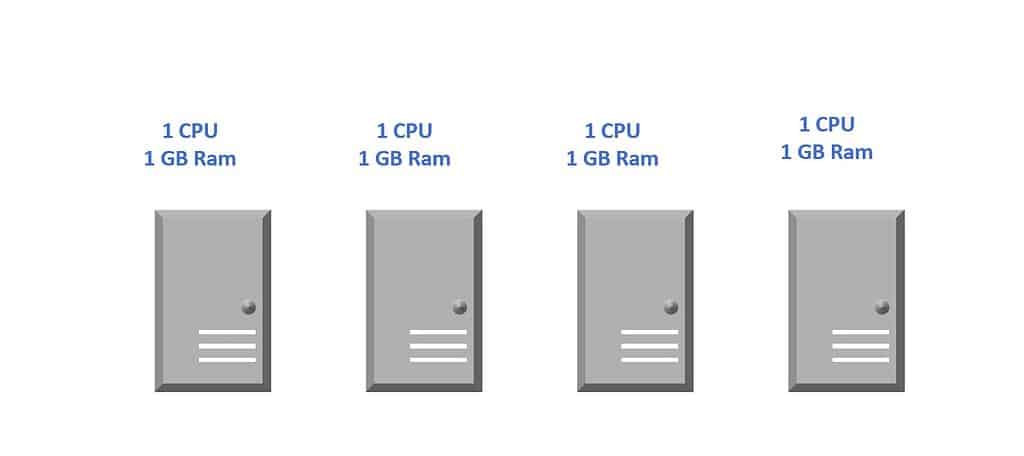

Horizontal Scaling

Horizontal scaling, also known as scaling out, is a cloud computing concept that involves adding more instances (virtual machines or containers) to handle the increased demand for an application or service.

It is a fundamental component of cloud scalability and is used to improve performance, availability, and fault tolerance in cloud-based systems.

In horizontal scaling, additional instances are deployed in parallel to distribute the workload across multiple nodes.

Each instance runs the same application code, and incoming requests or tasks are distributed evenly among these instances using load-balancing techniques.

As the demand for the application grows, more instances are added to handle the increased load, and as the demand decreases, excess instances can be removed to save costs and resources.
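One common way to decide how many instances to run is a proportional rule: scale the current count by the ratio of the observed metric to its target. The Kubernetes Horizontal Pod Autoscaler documents a rule of this shape; the function name and the numbers below are assumptions for this sketch.

```python
import math


def desired_instances(current: int, metric: float, target: float) -> int:
    """Proportional rule: ceil(current * metric / target), never below 1."""
    if target <= 0:
        raise ValueError("target must be positive")
    return max(1, math.ceil(current * metric / target))


# 4 instances at 90% average CPU with a 60% target -> scale out to 6.
print(desired_instances(4, 90.0, 60.0))  # 6
# 4 instances at 30% average CPU with a 60% target -> scale in to 2.
print(desired_instances(4, 30.0, 60.0))  # 2
```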

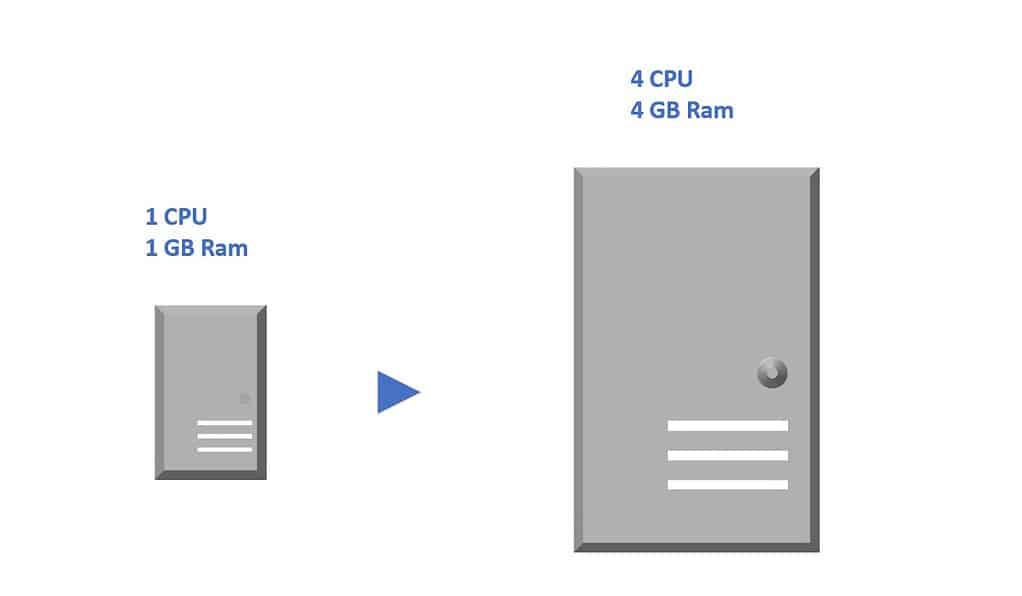

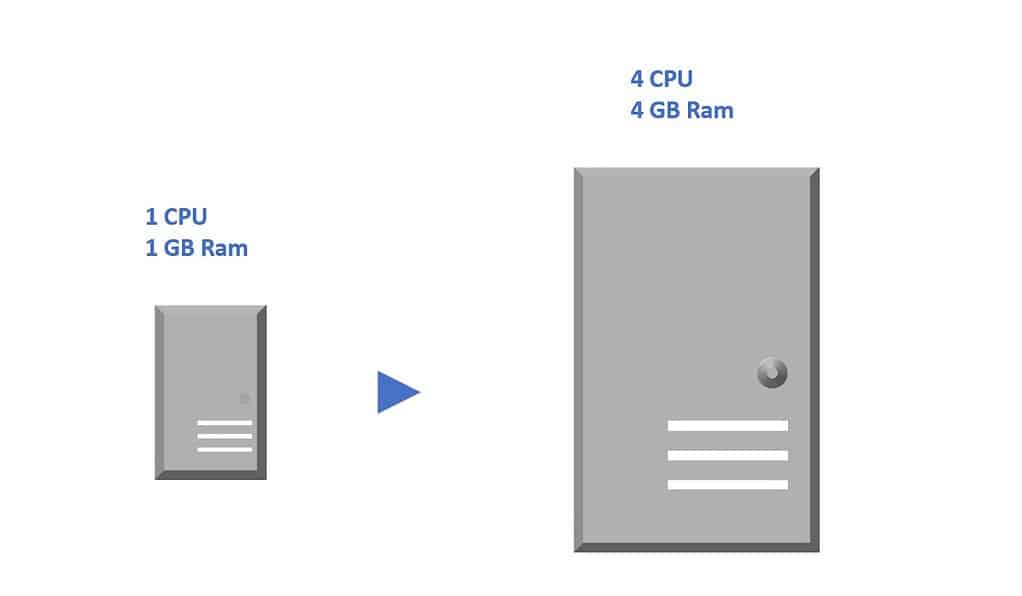

Vertical Scaling

Vertical scaling, also known as scaling up, is a cloud computing concept that involves increasing the capacity of individual instances (virtual machines or containers) to handle higher workloads. Unlike horizontal scaling, which adds more instances to distribute the workload, vertical scaling focuses on increasing the resources, such as CPU, RAM, or storage, of a single instance to meet growing demands.

In vertical scaling, the existing instance is upgraded or resized to accommodate increased performance requirements. For example, the instance’s CPU might be upgraded from 2 cores to 4 cores, or its RAM might be increased from 4 GB to 8 GB. This allows the single instance to handle a larger workload without the need to manage multiple instances.
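Vertical scaling can be pictured as moving one instance along a ladder of sizes. The `SIZE_LADDER` values and the `resize` helper below are hypothetical and only mirror the 2-core / 4 GB to 4-core / 8 GB example.

```python
from typing import List, Tuple

# Hypothetical size ladder: (CPU cores, RAM in GB), smallest first.
SIZE_LADDER: List[Tuple[int, int]] = [(2, 4), (4, 8), (8, 16)]


def resize(current: Tuple[int, int], step: int) -> Tuple[int, int]:
    """Move `step` rungs up (positive) or down (negative), staying on the ladder."""
    index = SIZE_LADDER.index(current)  # raises ValueError for unknown sizes
    new_index = max(0, min(len(SIZE_LADDER) - 1, index + step))
    return SIZE_LADDER[new_index]


print(resize((2, 4), 1))   # (4, 8)
print(resize((8, 16), 1))  # (8, 16), already the largest size
```

The ladder has a top rung, which reflects the main limit of vertical scaling: a single instance can only grow so far.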

Implement Health Checks

Configure health checks to ensure that only healthy instances remain in the autoscaling group.

Health checks can verify the application’s responsiveness or the availability of critical services.

Unhealthy instances can be automatically terminated and replaced with healthy ones.
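A minimal sketch of the idea: run a check against each instance and separate the ones that pass from the ones to replace. The `split_by_health` function and the `is_healthy` callback are assumptions; in practice the check would be an HTTP probe or a provider-managed health check.

```python
from typing import Callable, Iterable, List, Tuple


def split_by_health(instances: Iterable[str],
                    is_healthy: Callable[[str], bool]) -> Tuple[List[str], List[str]]:
    """Return (healthy, unhealthy) instance names using the supplied check."""
    healthy: List[str] = []
    unhealthy: List[str] = []
    for instance in instances:
        if is_healthy(instance):
            healthy.append(instance)
        else:
            unhealthy.append(instance)
    return healthy, unhealthy


# Hypothetical check results keyed by instance name.
status = {"web-1": True, "web-2": False, "web-3": True}
healthy, unhealthy = split_by_health(status, lambda name: status[name])
print(healthy)    # ['web-1', 'web-3']
print(unhealthy)  # ['web-2'], candidates for termination and replacement
```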

Test and Monitor Scaling

Test your scaling configurations and policies to ensure they work as expected.

Monitor the scaling activities, observe the impact on performance, and make adjustments if necessary.

Regularly review and analyze scaling events to fine-tune your autoscaling settings.
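Before trusting a policy in production, it can help to replay a recorded metric trace through it and inspect the resulting instance counts. The sketch below is a deliberately simplified simulation: the thresholds and limits are assumed values, and the trace does not react to the number of instances as a real system would.

```python
from typing import List


def simulate(cpu_trace: List[float], start: int = 2, min_n: int = 1,
             max_n: int = 5, high: float = 70.0, low: float = 30.0) -> List[int]:
    """Replay CPU readings through a step policy; return the count after each."""
    counts: List[int] = []
    n = start
    for cpu in cpu_trace:
        if cpu > high:
            n = min(max_n, n + 1)  # scale out by one, respecting the upper limit
        elif cpu < low:
            n = max(min_n, n - 1)  # scale in by one, respecting the lower limit
        counts.append(n)
    return counts


print(simulate([80, 90, 50, 20, 10, 10]))  # [3, 4, 4, 3, 2, 1]
```

Comparing the output with what you expected is a cheap way to catch limits or thresholds that are set incorrectly.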

Consider Cost Optimization

While autoscaling is beneficial for managing workload fluctuations, it’s important to keep an eye on costs.

Optimize costs by setting upper and lower limits on the number of instances and selecting cost-effective instance types.

Use cost monitoring and analysis tools provided by the cloud provider to identify potential cost-saving opportunities.
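Upper and lower limits put a ceiling and a floor on what autoscaling can spend. A small sketch, where the limits and the hourly price are made-up numbers:

```python
def clamp_instances(wanted: int, min_n: int, max_n: int) -> int:
    """Keep the requested instance count within the configured limits."""
    if min_n > max_n:
        raise ValueError("min_n must not exceed max_n")
    return max(min_n, min(max_n, wanted))


def hourly_cost(instances: int, price_per_instance_hour: float) -> float:
    """Estimated hourly cost for a given number of identical instances."""
    return instances * price_per_instance_hour


# The policy asks for 12 instances, but the upper limit is 8.
n = clamp_instances(12, min_n=2, max_n=8)
print(n)                     # 8
print(hourly_cost(n, 0.25))  # 2.0, using an assumed price of 0.25 per hour
```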

Evaluate Load Balancing

Implement load balancing in conjunction with autoscaling to distribute traffic across multiple instances.

This ensures that the load is evenly distributed and the scalability of your application is maximized.

Utilize load-balancing services provided by the cloud provider or third-party solutions.

Example: Elastic Load Balancing (ELB) is the AWS load-balancing service. It automatically distributes incoming traffic across multiple instances and integrates with autoscaling.

ELBs help ensure that traffic is evenly distributed and can scale along with the instances.
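To show what even distribution means, here is a round-robin sketch, one of the simplest load-balancing strategies. It is an illustration only and not how any particular managed load balancer is implemented; the request and instance names are hypothetical.

```python
from itertools import cycle
from typing import Dict, List


def round_robin(requests: List[str], instances: List[str]) -> Dict[str, str]:
    """Assign each request to the next instance in turn."""
    if not instances:
        raise ValueError("at least one instance is required")
    targets = cycle(instances)
    return {request: next(targets) for request in requests}


assignment = round_robin(["r1", "r2", "r3", "r4"], ["web-1", "web-2"])
print(assignment)
# {'r1': 'web-1', 'r2': 'web-2', 'r3': 'web-1', 'r4': 'web-2'}
```

When autoscaling adds an instance, it joins the rotation and starts taking its share of requests.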

Define Scaling Triggers

Define the events or conditions that trigger the autoscaling system to add or remove instances.

These triggers can be predefined schedules, specific metric thresholds, or custom events.

Capacity Planning

Perform capacity planning by forecasting and determining the appropriate capacity or resources needed to handle expected workloads.

Autoscaling plays a vital role in capacity planning by dynamically adjusting resources based on actual demand.
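A back-of-the-envelope capacity estimate divides the forecast load by what one instance can handle, with some headroom. The per-instance throughput and the 25% headroom below are assumptions; measure your own numbers with load tests.

```python
import math


def instances_needed(expected_rps: float, rps_per_instance: float,
                     headroom: float = 0.25) -> int:
    """Instances required for the forecast request rate plus headroom."""
    if rps_per_instance <= 0:
        raise ValueError("rps_per_instance must be positive")
    return math.ceil(expected_rps * (1 + headroom) / rps_per_instance)


# Forecast of 1000 requests/s, each instance handles about 100 requests/s.
print(instances_needed(1000, 100))  # 13
```

A figure like this is a reasonable starting point for the minimum and maximum limits of an autoscaling group.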

Examples of Cloud Provider Autoscaling Services

- Amazon Web Services (AWS): Amazon EC2 Auto Scaling

- Microsoft Azure: Azure Autoscale

- Google Cloud Platform: Google Cloud Autoscaler

These services provide the necessary tools and APIs to configure and manage autoscaling for their respective platforms.

Combining Autoscaling with Autohealing

Autohealing mechanisms, such as detecting and replacing unhealthy instances, complement autoscaling by ensuring that the application remains robust and reliable.
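The two mechanisms can be thought of as one reconciliation step: replace what is broken, then close the gap to the desired count. The `reconcile` function and its output keys are hypothetical names for this sketch.

```python
from typing import Dict


def reconcile(healthy: int, unhealthy: int, desired: int) -> Dict[str, int]:
    """Plan the actions needed to reach `desired` healthy instances."""
    return {
        # Autohealing: every unhealthy instance is terminated.
        "terminate_unhealthy": unhealthy,
        # Autoscaling and replacement: launch enough to reach the desired count.
        "launch": max(0, desired - healthy),
        # Scale in: remove healthy instances beyond the desired count.
        "remove_surplus": max(0, healthy - desired),
    }


print(reconcile(healthy=3, unhealthy=2, desired=5))
# {'terminate_unhealthy': 2, 'launch': 2, 'remove_surplus': 0}
```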

Scaling Across Multiple Regions

Implementing multi-region autoscaling ensures high availability and redundancy, especially in geographically distributed applications.
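One simple way to reason about multi-region capacity is to size each region for its own traffic while keeping a minimum everywhere for redundancy. The region names, traffic figures, and minimum of two instances are assumptions for illustration.

```python
import math
from typing import Dict


def per_region_capacity(rps_by_region: Dict[str, float],
                        rps_per_instance: float,
                        min_per_region: int = 2) -> Dict[str, int]:
    """Size each region for its own load, never below the redundancy minimum."""
    return {
        region: max(min_per_region, math.ceil(rps / rps_per_instance))
        for region, rps in rps_by_region.items()
    }


traffic = {"us-east": 950.0, "eu-west": 120.0, "ap-south": 30.0}
print(per_region_capacity(traffic, rps_per_instance=100.0))
# {'us-east': 10, 'eu-west': 2, 'ap-south': 2}
```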

Autoscaling for Serverless Architectures

In serverless platforms, autoscaling mechanisms differ from traditional virtual machine-based autoscaling. Understanding the scaling behaviors of serverless functions and containers is crucial for optimizing performance.
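Function platforms typically scale by concurrent executions rather than by instance count. A common estimate of the concurrency needed is request rate multiplied by average duration (Little's law). The function name and figures below are assumptions for this sketch.

```python
import math


def required_concurrency(requests_per_second: float,
                         avg_duration_seconds: float) -> int:
    """Estimate concurrent executions as rate x average duration, rounded up."""
    return math.ceil(requests_per_second * avg_duration_seconds)


# 100 requests/s, each taking about 0.5 s, needs roughly 50 concurrent executions.
print(required_concurrency(100, 0.5))  # 50
```

Comparing this estimate with your platform's concurrency limits shows whether traffic spikes are likely to be throttled.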

By following these best practices, you can keep autoscaling in your cloud environment efficient and effective. Your application can handle varying workloads while balancing performance and cost and providing a good user experience.

Do you have any comments or ideas or any better suggestions to share?

Please sound off your comments below.

Happy Coding !!

Please bookmark this page and share it with your friends. Please subscribe to the blog to receive notifications on freshly published (2024) best practices and guidelines for software design and development.
